“The successful launch of Mission Mars has heightened the interest of common people in the mysteries of space. There are many unsolved, little-known stories about asteroids in space. I have gone through many such true findings using NASA and other sources. The present story covers the presence of one such body near our own Earth.”

We had a craze for the Moon. We now have a craze for Mars. Are we missing something? The asteroid belt? Simply put, it consists of non-spherical, uninteresting blocks of rock that float around between Mars and Jupiter, seemingly not worth a trip or a mention. Fret not, because something large roams among those rocks; it is spherical in shape and as such is a dwarf planet.

As always, I have been following the NASA Dawn space probe[1], launched in 2007 to take a peek at Ceres, our relatively lesser-known dwarf planet neighbour residing in the asteroid belt between our new affair, Mars, and Jupiter. It has taken the spacecraft 7.5 years to reach its destination, and it will spend the next 14 months mapping the diminutive world.

The US$473 million Dawn mission is the first to target two different celestial objects to better understand how the solar system evolved. It is powered by ion propulsion engines, which provide gentle yet constant acceleration and use propellant more efficiently than conventional chemical rockets. With its massive solar wings unfurled, it measures about 20 metres across, the length of a tractor-trailer.

Ceres is the only dwarf planet in the inner Solar System and the only object in the asteroid belt known to be unambiguously rounded by its own gravity! So much so that, on closer observation, it looks just like a miniature Luna, our Moon. Now, is it a coincidence that Ceres has a surface area roughly equal to that of our country, India? Ceres is the largest object in the asteroid belt. Its mass has been determined by analysing the influence it exerts on smaller asteroids. Results differ slightly between researchers. The average of the three most precise values as of 2008 is 9.4×10²⁰ kg. With this mass, Ceres comprises about a third of the estimated total mass of the asteroid belt, (3.0 ± 0.2)×10²¹ kg.
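As a quick check of the "about a third" figure, the ratio of the two masses quoted above can be worked out directly. This is only a minimal sketch: the variable and function names are illustrative, and the range simply uses the ±0.2×10²¹ kg bounds quoted for the belt mass, not any formal error analysis.

```python
# Masses in kilograms, as quoted in the text.
ceres_mass = 9.4e20          # average of the three most precise values (2008)
belt_mass = 3.0e21           # estimated total mass of the asteroid belt
belt_uncertainty = 0.2e21    # quoted uncertainty on the belt mass


def mass_fraction(part, total):
    """Return the share of `total` made up by `part`."""
    return part / total


# Central estimate, plus the range implied by the belt-mass uncertainty.
central = mass_fraction(ceres_mass, belt_mass)
low = mass_fraction(ceres_mass, belt_mass + belt_uncertainty)
high = mass_fraction(ceres_mass, belt_mass - belt_uncertainty)

print(f"Ceres holds about {central:.0%} of the belt's mass "
      f"(range {low:.0%} to {high:.0%})")
```

The central value comes out a little under one third, which matches the rounded figure given in the text.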

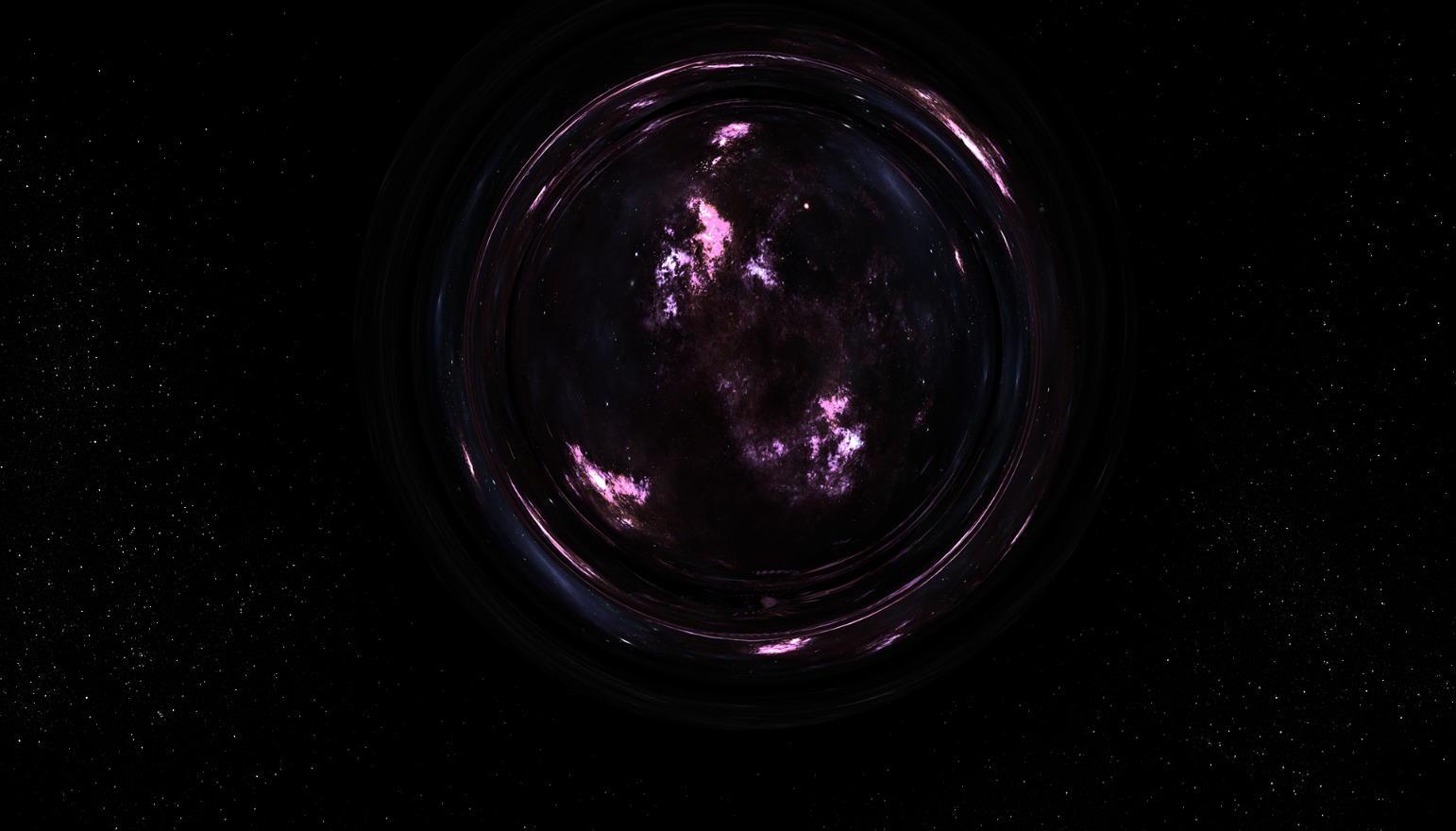

This image was taken on 25 February 2015.

The Dawn space probe will study Ceres for 16 months. At the end of the mission, it will stay in its lowest orbit indefinitely, and it could remain there for hundreds of years.

The first thing that comes to a layman's mind is the question of habitability. Although Ceres is not as actively discussed as a potential home for microbial extraterrestrial life as Mars, Titan, Europa or Enceladus, the presence of water ice has led to speculation that life may exist there, and that hypothesized ejecta could have come from Ceres to Earth.

The recent look at Ceres has answered many questions, but raised a lot more. Researchers think Ceres' interior is dominated by a rocky core topped by ice that is then insulated by rocky lag deposits at the surface. A big question the mission hopes to answer is whether there is a liquid ocean of water at depth. Some models suggest there could well be. The evidence will probably be found in Ceres' craters, which have a muted look to them. That is, the soft interior of Ceres has undoubtedly had the effect of relaxing the craters' original hard outlines.

One big talking point has dominated the approach to the object: the origin and nature of two very bright spots seen inside a 92km-wide crater in the Northern Hemisphere. I speculate that those bright spots are some kind of icy cryovolcanoes, which sounds cool enough to me (pun intended).

I believe that Ceres will eventually tell us something about the origins of our solar system. In the early days of the solar system, Ceres was a planetary embryo on its way to merging with other objects to form a terrestrial planet, but that evolution was somehow stopped and its form was kept intact.

We will have more of Ceres soon, as the Chinese are planning their own mission in the next decade. I hope India will follow suit.

References

2. http://www.bbc.com/news/science-environment-31754586ecraft-nasa/24485279/

4. NASA Jet Propulsion Laboratory raw data

[1] NASA's Dawn probe is a spacecraft sent to study objects in the asteroid belt.

[1] The author is pursuing engineering in Electronics and Communications at Manipal University, Jaipur. He is a keen observer of space research and technology. He may be contacted at sankrant.chaubey@gmail.com
